Bankwest: Pricing Tool

Transforming Mortgage Pricing Processes through User-Centered Design and Automation

Bankwest successfully optimized its mortgage pricing request and approval processes by leveraging user-centered design, automation, and data-driven decision-making. This case study explores how Bankwest transformed its pricing processes, resulting in significant improvements in efficiency, accuracy, user satisfaction, and business outcomes.

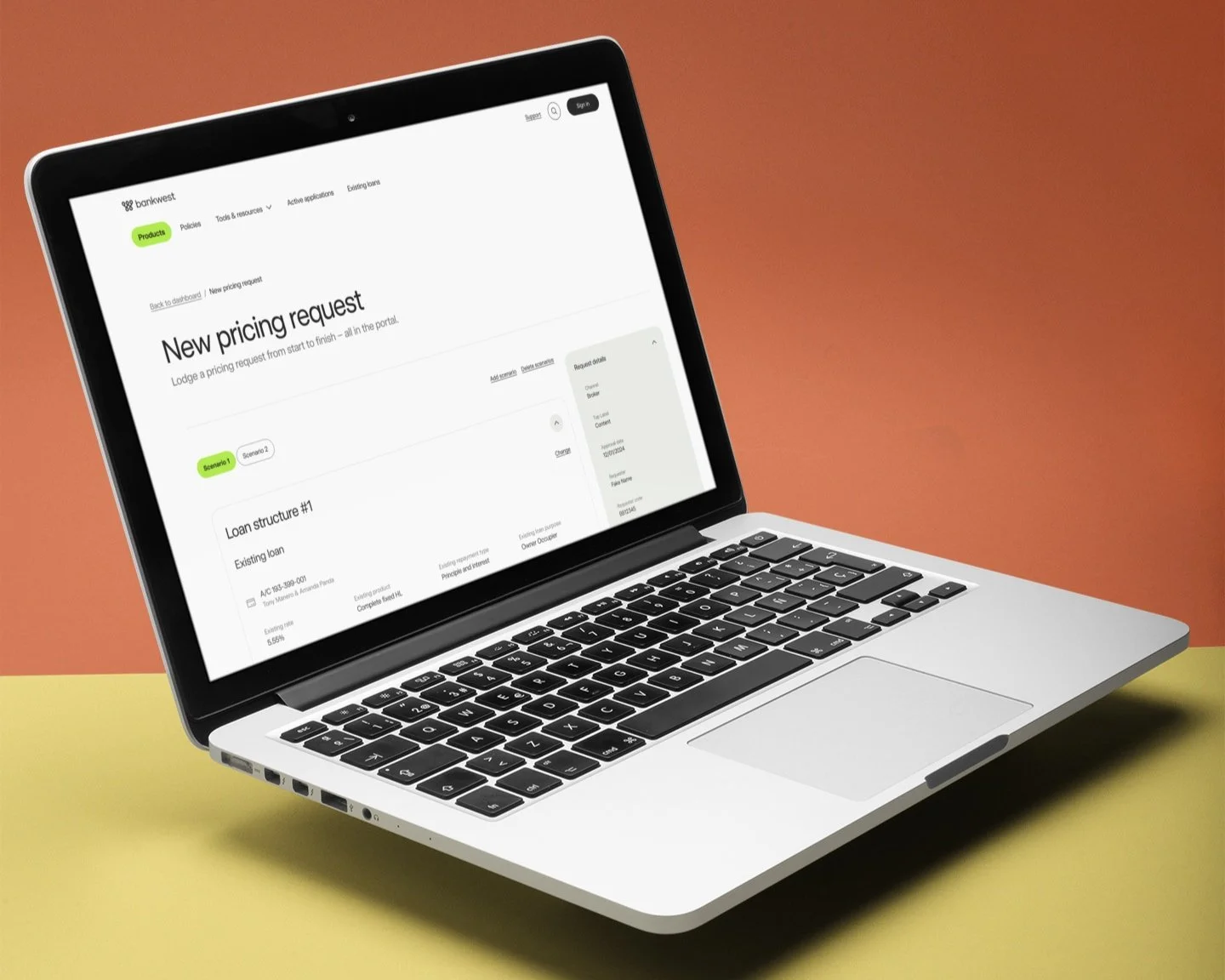

The Home Loan Pricing Tool is a product designed to facilitate the pricing request process between Brokers and Bankwest. The tool allows Brokers to submit pricing requests and escalate them to ask for better rates. An internal version of the tool is available for Bankwest teams to review and provide updated offers when a request is escalated.

Bankwest's Mortgage Support Team (MST) was facing a significant challenge in managing the high volume of pricing requests received from brokers. The existing process was inefficient, with many requests being rejected because the customer was business-managed, in collections or hardship, or the loan was ineligible for repricing. This led to delays, wasted effort, and a suboptimal experience for both brokers and customers.

To address these issues, Bankwest initiated a project to redesign the Pricing Tool, focusing on streamlining the process, reducing rejections, and empowering brokers to submit high-quality pricing requests. The project aimed to leverage user research, data analytics, and machine learning to develop a more intuitive, efficient, and intelligent tool that meets the needs of all stakeholders involved in the pricing request process.

Workshops based on the Value Proposition Canvas uncovered user workflows, pains, and gains, laying the foundation for solutions that truly address their needs.

Research Methodology

The discovery phase consisted of two sequential activities: workshops and a survey, aimed at gaining a comprehensive understanding of the Pricing Tool and its users.

Workshops

The workshops were based on the Value Proposition Canvas (VPC) and included participants from various internal teams and brokers, representing different archetypes such as team leaders, experienced team members, and new team members.

During the workshops, participants were asked to:

Give a profile of themselves (persona)

Describe their workflow and tasks (Jobs-to-be-Done or JTBD)

Explore pains and gains

Discuss potential pain relievers and gain creators

The workshops were semi-structured with a script based on the VPC. A note-taker collected notes while the moderator guided the participants in the discussion, ensuring the capture of everyone's opinions. The workshops were also recorded for further analysis.

Through a MaxDiff survey, user needs were prioritized by identifying what mattered most and least to brokers and internal teams, ensuring data-driven design decisions.

Survey

An online survey was conducted after the workshops to collect quantitative data from a larger group of brokers and internal teams. The survey consisted of two main parts: initial profiling questions and a MaxDiff (Maximum Difference Scaling) section.

The initial profiling questions were designed to gather information about the respondents' roles, experience levels, and specific needs based on their personas. This information was used to segment the respondents into different groups, such as brokers and internal teams, and to analyze the MaxDiff results based on these segments.

MaxDiff is a powerful technique for prioritizing a list of items by asking respondents to choose the most and least important items from a subset of the full list. This process is repeated multiple times with different subsets, allowing for a robust ranking of the items based on their relative importance.
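As a rough illustration of how such responses can be turned into a ranking, the sketch below uses simple count-based scoring: for each item, the number of times it was picked as most important minus the number of times it was picked as least important, divided by the number of times it was shown. This is an assumption for illustration only. The case study does not say which analysis was applied, and MaxDiff results are often estimated with logit or hierarchical Bayes models instead. The item names and responses are hypothetical, not survey data.

```python
from collections import Counter


def maxdiff_count_scores(tasks):
    """Score items from MaxDiff tasks using best-minus-worst counts.

    Each task is a tuple (shown_items, best_item, worst_item).
    The score is (times best - times worst) / times shown, so it lies in [-1, 1].
    """
    shown, best, worst = Counter(), Counter(), Counter()
    for items, best_item, worst_item in tasks:
        shown.update(items)
        best[best_item] += 1
        worst[worst_item] += 1
    return {item: (best[item] - worst[item]) / shown[item] for item in shown}


# Hypothetical responses: each task shows a subset of three of the four items.
tasks = [
    (("fewer_rejections", "faster_response", "clearer_status"),
     "fewer_rejections", "clearer_status"),
    (("faster_response", "clearer_status", "better_navigation"),
     "faster_response", "better_navigation"),
    (("fewer_rejections", "clearer_status", "better_navigation"),
     "fewer_rejections", "better_navigation"),
    (("fewer_rejections", "faster_response", "better_navigation"),
     "fewer_rejections", "better_navigation"),
]

scores = maxdiff_count_scores(tasks)
# Items ordered from most to least important.
ranking = sorted(scores, key=scores.get, reverse=True)
```

Running the same function separately on each respondent segment (for example, brokers versus internal teams) would give per-segment rankings of the kind the profiling questions make possible.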

The MaxDiff survey helped to identify the most critical issues and desired features for the Pricing Tool from the perspective of both brokers and internal teams. By augmenting the MaxDiff results with the persona-based segmentation from the initial profiling questions, the survey provided a more nuanced understanding of the priorities and needs of different user groups.

Grounded in user insights and aligned with business goals, the discovery phase combined qualitative workshops, persona-driven surveys, and benchmarking to uncover challenges and refine pricing solutions.

Business Dashboard Benchmarking

In addition to the survey, benchmarking data was collected from the business dashboard to establish baseline metrics for key performance indicators (KPIs) such as queue size, response times, and SLA compliance. This data provided a quantitative understanding of the current state of the pricing request process and helped set targets for improvement.

By combining qualitative data from the workshops, quantitative data from the persona-augmented MaxDiff survey, and benchmarking data from the business dashboard, the discovery phase aimed to provide a comprehensive understanding of the needs, challenges, and preferences of brokers and internal teams, as well as the current performance of the pricing request process. This holistic approach ensures that the proposed design solutions are grounded in user insights, aligned with business objectives, and measurable against established benchmarks.

Findings

The research revealed several key findings related to the current pricing request process and the challenges faced by the internal teams:

High volume of requests and growing queue size. The internal teams' queues have grown significantly, indicating a substantial increase in the volume of incoming requests. This puts pressure on the teams to action requests quickly and efficiently. The teams have a 48-hour SLA to respond to brokers for requests within their approval authority. If escalation is needed, an additional 48 hours is added, potentially leading to a total response time of up to 4 business days. Delays in responses could negatively impact broker and end customer experience.

Manual effort wasted on declining ineligible requests. The teams spend significant time and effort manually declining pricing requests that are ineligible based on current business rules. These include requests for business-managed customers, customers in collections or hardship, construction loans, customers who have already accepted a pricing offer directly with the bank, fixed-rate loans outside the eligible repricing window, and resubmitted requests with no change in details.

Usability and navigation challenges. The pricing tool's user interface lacks clarity, visual hierarchy, and intuitive navigation, hindering the teams' productivity and user experience.

An automated rule-based filtering system saves time by blocking ineligible pricing requests at the source, streamlining workflows and reducing manual effort.

Design Solutions

Based on the research findings, prioritization from the MaxDiff survey, and benchmarking data, the following design solutions were proposed:

Implement an automated rule-based filtering system. Develop a system that automatically filters out ineligible pricing requests based on predefined business rules. This system should block requests from being submitted if they meet any of the ineligibility criteria, such as the customer being business-managed, in collections or hardship, or the loan being for construction. By blocking these requests at the source, the internal teams can save significant time and effort that is currently wasted on manually declining them.
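A minimal sketch of what such a filter could look like is shown below. The rule list mirrors the ineligibility criteria named in the findings, but the class, field names, and messages are hypothetical and do not reflect Bankwest's actual implementation or data model.

```python
from dataclasses import dataclass


@dataclass
class PricingRequest:
    # Hypothetical flags, one per ineligibility criterion named in the findings.
    business_managed: bool = False
    in_collections_or_hardship: bool = False
    construction_loan: bool = False
    accepted_direct_offer: bool = False
    fixed_rate_outside_repricing_window: bool = False
    unchanged_resubmission: bool = False


# (flag name, message shown to the broker); the wording is illustrative.
INELIGIBILITY_RULES = [
    ("business_managed", "Customer is business-managed"),
    ("in_collections_or_hardship", "Customer is in collections or hardship"),
    ("construction_loan", "Loan is a construction loan"),
    ("accepted_direct_offer", "Customer already accepted an offer directly with the bank"),
    ("fixed_rate_outside_repricing_window", "Fixed-rate loan is outside the repricing window"),
    ("unchanged_resubmission", "Request was resubmitted with no change in details"),
]


def ineligibility_reasons(request: PricingRequest) -> list[str]:
    """Return the message for every rule the request trips, in rule order."""
    return [message for flag, message in INELIGIBILITY_RULES if getattr(request, flag)]


def can_submit(request: PricingRequest) -> bool:
    """A request may be submitted only if no ineligibility rule applies."""
    return not ineligibility_reasons(request)
```

Keeping the rules in a single list means a new business rule can be added as one entry, and the same reasons can be shown to the broker when submission is blocked.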

Intelligent request type determination. The existing pricing tool requires brokers to select the type of pricing request (transfer, repricing, or purpose change). However, brokers often choose the wrong type, leading to incorrect requests and inefficiencies. To address this issue, the new design allows brokers to select the characteristics of the loan based on their preferences, such as the loan amount, LVR, and loan purpose. The system then intelligently determines the appropriate request type based on the provided parameters. This approach eliminates the need for brokers to manually select the request type and reduces the risk of errors, ultimately streamlining the pricing request process and improving accuracy.

Customer tags and status indicators. To provide internal teams with quick and easy access to crucial customer information, the redesigned Pricing Tool incorporates customer tags and status indicators. These tags highlight important aspects of the customer's profile, such as whether they are in hardship, business-managed, or have other special considerations. By prominently displaying these tags within the tool, team members can quickly identify and address pricing requests that may require additional attention or follow specific protocols. This feature enhances the team's ability to provide personalized service and ensures that sensitive customer cases are handled appropriately.

Redesigned information architecture brings key loan details together, streamlining workflows and enabling faster, informed decision-making.

Redesigned information architecture. The information architecture (IA) of the Pricing Tool is redesigned to ensure that related data points are grouped together logically, making it easier for users to find and process the information they need. Key loan details, such as loan size and LVR, are now presented in a cohesive manner, allowing team members to quickly assess the loan's characteristics. Additionally, the redesigned IA incorporates loan fixed rate expiry and interest-only (I/O) end dates, providing the team with a comprehensive view of the loan's timeline and enabling them to make informed decisions. By having all the required information in one place, the team can work more efficiently and effectively, reducing the need to navigate through multiple screens or systems to gather the necessary data.

Advisory feedback loop. An advisory feedback loop is implemented for brokers during the "Generate" and "Review" stages of the pricing request process. As brokers fill out the request details, real-time validation is performed, and guidance messages are displayed for fields that may trigger a rejection by MST. These potential issues are summarized in the "Review" stage, allowing brokers to edit the request or submit it with awareness of the advisories. This proactive education helps brokers learn the criteria for successful requests, reduces avoidable rejections, and improves the overall efficiency of the pricing request process.

Scenario creation and comparison empower brokers to optimize pricing requests and save time by reducing revisions and resubmissions.

Scenario creation and comparison. The redesigned Pricing Tool introduces a scenario creation feature that allows brokers to generate and compare multiple pricing request drafts for the same customer within the "Generate" and "Review" stages. This feature enables brokers to experiment with different combinations of loan terms, rates, and products to identify the most suitable and competitive offer for their customer. The ability to compare scenarios side-by-side empowers brokers to make data-driven decisions, optimize their pricing requests, and provide a higher level of service to their customers. Additionally, this feature saves time for both brokers and MST by reducing the number of back-and-forth interactions and minimizing the need for revisions and resubmissions.

These design solutions work together to create a more intuitive, informative, and streamlined Pricing Tool that empowers both brokers and internal teams to navigate the pricing request process with ease and accuracy. By incorporating customer tags, status indicators, and a redesigned information architecture, the tool provides users with the right information at the right time, enabling them to make informed decisions and deliver exceptional service to their customers.

User Stories

To ensure that the proposed design solutions effectively address the identified issues and meet the needs of all stakeholders, a comprehensive validation process was conducted. User stories were created based on the insights gathered from the workshops and prioritized using the results of the MaxDiff survey. These user stories served as the foundation for mapping out the business rules, core functionality, and information architecture of the enhanced Pricing Tool.

Design Cycles

Four-week cycles began after prioritizing user stories, focusing on critical aspects of the customer journey to address key pain points effectively. Each cycle followed a structured process:

Ideation: Brainstorming and developing solutions tailored to identified issues.

Validation: Testing ideas with users to refine them.

The iterative nature of the process allowed the team to dive deep into design details and incorporate learnings from validation sessions in subsequent cycles, creating a continuous improvement process. Weekly meetings with the development team ensured alignment, and each cycle concluded with a stakeholder showcase to foster consensus and secure sign-off before progressing.

Validation combined user testing with advanced surveys, including heat-mapping and A/B testing, to refine designs and ensure optimal user efficiency.

Prototypes of the redesigned tool were then developed, incorporating the prioritized features and improvements. These prototypes were used in validation sessions and speed critique sessions with stakeholders, brokers, and subject matter experts (SMEs).

Following these user testing sessions, a Qualtrics survey was developed to turn the initial observations into quantifiable data.

The survey was designed to dig deeper into the insights gained from direct user interactions, using advanced features like heat-mapping to capture nuanced user feedback. A/B testing within the survey helped to compare different design approaches and understand their impact on user efficiency.

Results

The implementation of the proposed solutions, along with the insights gained from user experience mapping, heuristic review, and usability testing, yielded significant benefits for Bankwest. The following metrics demonstrate the measurable improvements achieved:

Processing Time

-67%

From 30 to 10 minutes

Manual Interventions

-80%

From an average of 25 to an average of 5 manual interventions per 100 pricing requests.

Pricing Decision Accuracy

+13 percentage points

From 85% to 98%

User Satisfaction

+40%

From 3.2 out of 5 to 4.5 out of 5

Productivity

+60%

Before. The MST and HLD teams could process an average of 50 pricing requests per day.

After. The MST and HLD teams could process an average of 80 pricing requests per day.

These measurable improvements demonstrate the success of the optimization project in reducing manual effort, minimizing errors, enhancing accuracy, improving user satisfaction, and increasing overall productivity. The metrics provide clear evidence of the tangible benefits achieved through the implementation of the proposed solutions.
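The headline percentages can be reproduced from the before and after values reported above. The short sketch below does the arithmetic; the function and variable names are illustrative. Note that the satisfaction change works out to roughly 40.6%, which the summary rounds to +40%, and that the accuracy gain of 13 is a difference in percentage points (85% to 98%), not a relative change.

```python
def relative_change(before, after):
    """Percentage change from before to after, relative to the before value."""
    return (after - before) * 100 / before


# (before, after) pairs taken from the results reported above.
metrics = {
    "processing_time_minutes": (30, 10),
    "manual_interventions_per_100_requests": (25, 5),
    "user_satisfaction_out_of_5": (3.2, 4.5),
    "requests_processed_per_day": (50, 80),
}

changes = {name: relative_change(before, after)
           for name, (before, after) in metrics.items()}

# Accuracy is already a percentage, so its gain is stated in percentage points.
accuracy_gain_points = 98 - 85

for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
print(f"pricing_decision_accuracy: +{accuracy_gain_points} percentage points")
```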
